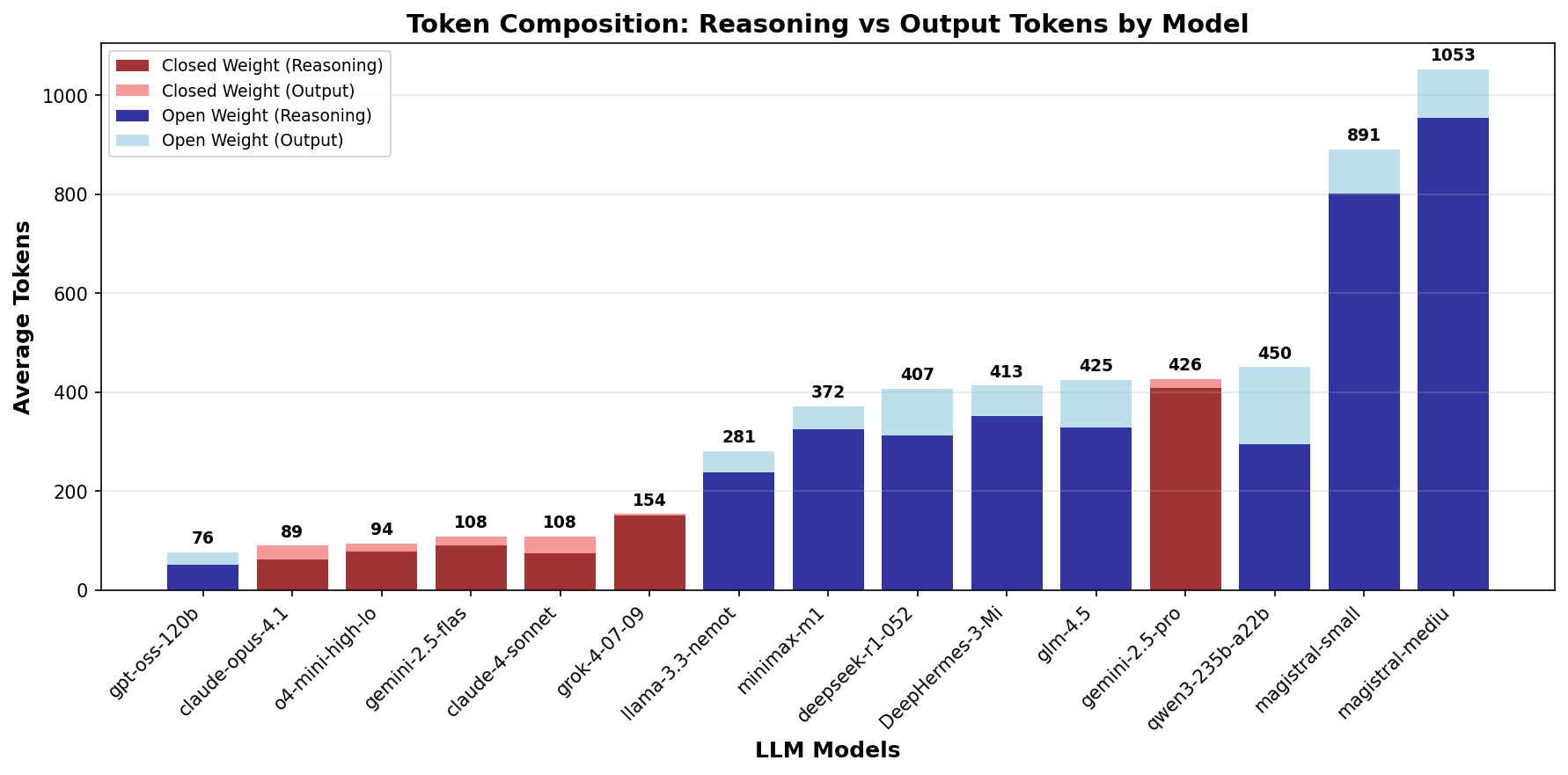

A recent study by Nous Research finds that open-source AI models use significantly more computational resources than their closed-source counterparts, which could erode their cost advantage and alter corporate AI strategies. The research found that open-weight models consume 1.5 to 4 times more tokens (the basic units of AI computation) than closed models from OpenAI and Anthropic on similar tasks, with simple questions sometimes needing up to 10 times more tokens.

The study emphasizes that although open-source models are cheaper to run per token, that advantage shrinks or disappears if they need many more tokens to solve a problem. Researchers analyzed 19 AI models on tasks including basic questions, math problems, and logic puzzles, finding that poor token efficiency can raise the total cost of a query even when per-token pricing is lower.
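The trade-off between per-token price and token count comes down to simple arithmetic, sketched below in Python. The prices and token counts are hypothetical placeholders chosen for illustration, not figures from the study, and the function names are made up for this example.

```python
def cost_per_query(tokens_used: int, price_per_million_tokens: float) -> float:
    """Dollar cost of one query: tokens consumed times the per-token price."""
    return tokens_used * price_per_million_tokens / 1_000_000


def break_even_token_ratio(open_price: float, closed_price: float) -> float:
    """Token multiple at which the cheaper-per-token model costs the same.

    If the open model uses more than this many times the closed model's
    tokens, its lower per-token price no longer saves money.
    """
    return closed_price / open_price


# Hypothetical figures (assumptions, not data from the study):
# a closed model at $4.00 per million tokens using 1,000 tokens,
# and an open model at $1.50 per million tokens using 4x as many.
closed_cost = cost_per_query(tokens_used=1_000, price_per_million_tokens=4.00)
open_cost = cost_per_query(tokens_used=4_000, price_per_million_tokens=1.50)
ratio = break_even_token_ratio(open_price=1.50, closed_price=4.00)

print(f"closed model: ${closed_cost:.4f} per query")
print(f"open model:   ${open_cost:.4f} per query")
print(f"break-even token ratio: {ratio:.2f}x")
```

Under these assumed numbers, the open model is cheaper per token but more expensive per query, because its 4x token use exceeds the break-even ratio of roughly 2.67x.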

OpenAI’s o4-mini and gpt-oss models stood out for token efficiency, especially on math tasks, using significantly fewer tokens than other models. In contrast, certain other open-source models used far more tokens, making them less economical. Closed-source models are often optimized to reduce token use, whereas open-source models may sacrifice efficiency for improved reasoning performance.

The study called for future AI development to focus on token efficiency alongside accuracy, and made its data available for further research. In today’s cost-sensitive AI landscape, it suggests that the efficiency of AI models could become as critical as their intelligence.