Software developers primarily spend their time on activities other than coding. Recent industry research indicates that coding comprises only 16% of their hours, with most…

Tag: large language models

Exposed by the Em Dash — AI’s Beloved Punctuation Mark and How It’s Revealing Your Secrets

Let’s discuss the em dash. Not the humble hyphen or its more confident cousin, the en dash. I’m referring to the ‘EM dash,’ that long,…

OpenCUA’s Open Source Agents Compete with Proprietary Models from OpenAI and Anthropic

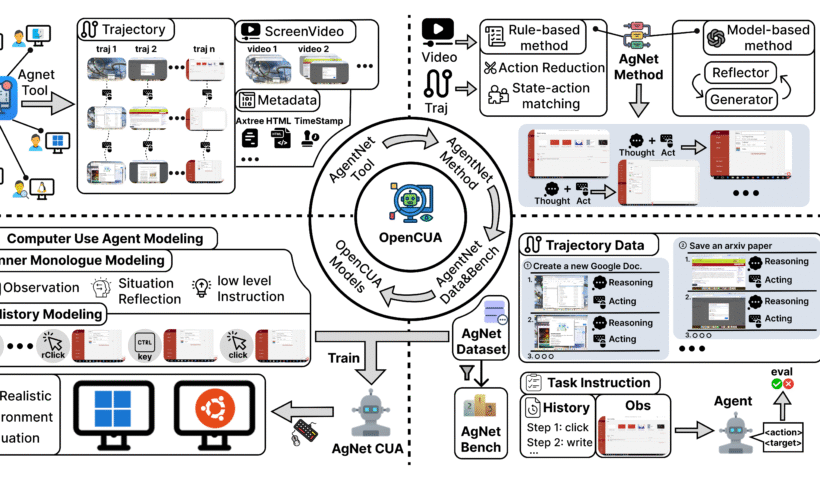

Researchers at The University of Hong Kong (HKU) and collaborators have developed OpenCUA, an open-source framework to create robust AI agents for computer operation. OpenCUA…

ByteDance, TikTok’s parent, unveils new open-source Seed-OSS-36B model with 512K token context.

The company’s Seed Team of AI researchers today released Seed-OSS-36B on AI code sharing website Hugging Face. Seed-OSS-36B is a new line of open-source, large…

LLMs Produce ‘Fluent Nonsense’ When Operating Beyond Their Training Bounds

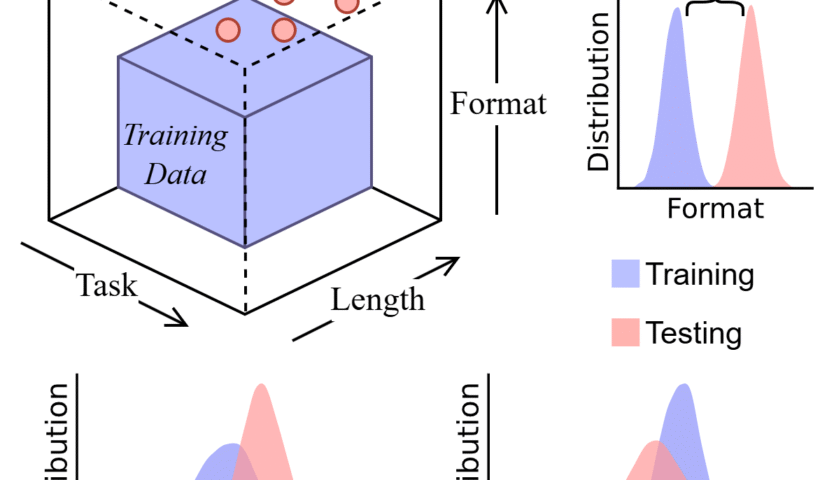

Researchers at Arizona State University have released a new study challenging the idea that Chain-of-Thought (CoT) reasoning in Large Language Models (LLMs) indicates genuine intelligence.…

Hugging Face: 5 Ways Enterprises Can Reduce AI Costs Without Compromising Performance

Enterprises often believe AI models inherently need substantial computing power, leading them to seek out more resources. Sasha Luccioni from Hugging Face suggests a shift…

GEPA Enhances LLMs Without Expensive Reinforcement Learning

Researchers from the University of California, Berkeley, Stanford University, and Databricks have introduced a new AI optimization method called GEPA that significantly surpasses traditional reinforcement…

LLMs for Beginners

Under the concept of “you can only fear what you don’t understand,” I’m starting a blog series to discuss AI, particularly Large Language Models (LLMs),…

Designing Feedback Loops: Enhancing LLMs to Improve Continuously

Large language models (LLMs) excel at reasoning, generating, and automating, but transforming a demo into a sustainable product requires the system to learn from actual…

The Emergence of Intuitive Apps: Why Post-2026 SaaS Must Cater to Your Needs

The future of B2B software is not just about AI; it’s about being conversation-driven. It’s time for Vibeable SaaS, where you express your desired outcomes…
