Researchers have released a comprehensive survey of “OS Agents,” AI systems capable of autonomously controlling digital interfaces. Published at the Association for Computational Linguistics (ACL) conference, the academic review maps a rapidly evolving field fueled by investment from major tech companies. “With advancements in (multimodal) large language models, creating AI assistants like Iron Man’s J.A.R.V.I.S is within reach,” the researchers state.

The survey, led by researchers at Zhejiang University and the OPPO AI Center, arrives as tech giants introduce AI systems designed to automate how people interact with computers. Notable initiatives include OpenAI’s “Operator,” Anthropic’s “Computer Use,” Apple’s “Apple Intelligence,” and Google’s “Project Mariner.” The research documents a surge in models and frameworks for computer control, with publication rates rising sharply since 2023.

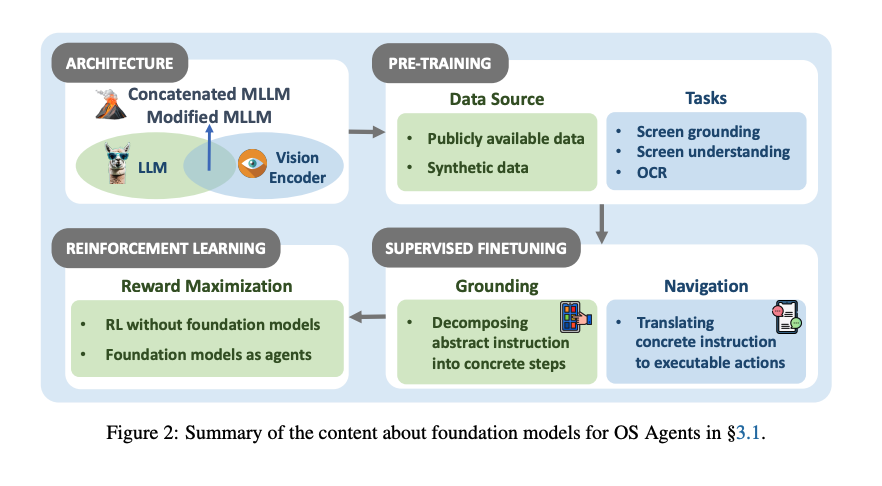

AI systems that can understand and manipulate the digital world much as humans do are already being built. Current systems use computer vision to interpret what appears on screen and then interact with those interfaces, executing tasks autonomously and quickly. “OS Agents could substantially enhance user experiences, handling tasks like online shopping and travel arrangements seamlessly,” the researchers note.

These systems can carry out complex workflows that span multiple applications, reducing the human input required to a matter of seconds. However, AI agents that can control corporate systems also bring new security challenges. They open novel attack vectors: risks such as “Web Indirect Prompt Injection,” in which instructions hidden in content the agent reads can hijack its behavior, and “environmental injection attacks” are significant concerns.

Performance analysis of these systems reveals clear limitations, particularly on complex tasks. While the agents handle simple operations well, they struggle with workflows that require sustained reasoning. This gap suggests that current systems are best suited to routine tasks rather than to replacing human judgment in complex scenarios.

The most intriguing challenge lies in personalization and self-evolution, as future OS agents are expected to adapt to individual user preferences. This capability could revolutionize how people interact with computers, but it could also pose significant privacy risks. The race to build AI agents that can use computers the way humans do is intensifying, even as fundamental challenges in security, reliability, and personalization remain unresolved.

AI agents appear set to transform how people interact with digital systems; the pressing question is whether we are prepared for the implications. As the technology advances, the need for robust security and privacy frameworks grows more urgent.