Shadow AI is the $670,000 issue many organizations aren’t aware they have.

IBM’s 2025 Cost of a Data Breach Report, released with the Ponemon Institute, reveals that breaches involving unauthorized AI tool use by employees cost organizations $4.63 million on average, about 4% more than the global average of $4.44 million.

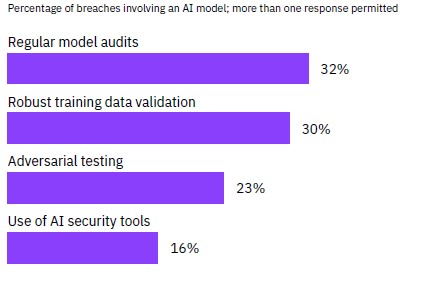

The research, based on 3,470 interviews across 600 breached organizations, shows how quickly AI adoption outpaces security oversight. Although only 13% of organizations reported AI-related security incidents, 97% of those breached lacked proper AI access controls. Another 8% weren’t sure if they’d been compromised through AI systems.

“The data indicates a gap between AI adoption and oversight, which threat actors are starting to exploit,” said Suja Viswesan, Vice President of Security and Runtime Products at IBM. “The report revealed a lack of basic access controls for AI systems, leaving highly sensitive data exposed and models vulnerable to manipulation.”

Shadow AI and supply chains as attack vectors

The report indicates that 60% of AI-related security incidents resulted in compromised data, while 31% disrupted daily operations. Customers’ personally identifiable information (PII) was exposed in 65% of shadow AI incidents, compared to the 53% global average. One major weakness in AI security is governance, with 63% of breached organizations lacking or still developing AI governance policies.

“Shadow AI is like doping in the Tour de France; people want an edge without realizing the long-term consequences,” Itamar Golan, CEO of Prompt Security, told VentureBeat. His company catalogs over 12,000 AI apps and detects 50 new ones daily.

VentureBeat has reported that adversaries’ methods are outpacing current defenses against software and model supply chain attacks. It is not surprising, then, that the report identifies supply chains as the primary attack vector in AI security incidents: 30% involved compromised apps, APIs, or plug-ins, the most common cause.

Weaponized AI is proliferating

All forms of weaponized AI, including LLMs designed to enhance attacks, are spreading rapidly. Sixteen percent of breaches involved attackers using AI, primarily for AI-generated phishing (37%) and deepfake attacks (35%). Models like FraudGPT, GhostGPT, and DarkGPT are available for as little as $75 a month and are built for tasks such as phishing, exploit generation, code obfuscation, vulnerability scanning, and credit card validation.

The more fine-tuned an LLM is, the greater the chance it can be directed to produce harmful outputs. Cisco’s