In 1956, during a conference at Dartmouth College, a group of computer scientists, including John McCarthy, who coined the term “artificial intelligence,” gathered to discuss creating machines capable of human-like language comprehension, problem-solving, and self-improvement. A key focus, and a challenging one, was enabling machines to exhibit creativity and originality. Psychologists at the time struggled to define human creativity as anything more than intelligence. The Dartmouth group suggested that creativity involves randomness guided by intuition. Today, decades later, generative AI models embody this idea. While large language models have advanced text generation, diffusion models are making significant inroads in creative fields by converting random noise into coherent outputs, such as images and music, that are often indistinguishable from human creations.

Now these models are entering music, a particularly vulnerable creative field, with AI-generated works already reaching us through streaming services and playlists. The debate over AI’s creative authenticity, familiar from the visual arts, has now reached music. Because music evokes such strong emotional responses, AI-generated songs challenge our understanding of authorship and originality. Courts are grappling with the legal complexities, as record labels sue AI companies for allegedly replicating human art without compensation. These debates force us to reconsider human creativity: is it merely statistical learning plus randomness, or is there something inherently human about it? Being moved by an AI-generated song prompts us to question what creativity really is.

Following the Dartmouth conference, AI pioneers pursued foundational technologies, while cognitive scientists explored human creativity. A definition eventually emerged: creative works are both novel and useful. In the 1990s, advances such as fMRI began to reveal the brain processes behind creativity, spanning idea generation, evaluation, and associative thinking. AI approaches to creativity, however, have not taken their cue from the brain. Diffusion models, developed about a decade ago, instead learn to reverse a process that gradually adds random noise to data, producing coherent forms by de-noising.
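To make the de-noising idea concrete, here is a toy sketch in Python. It is an illustration under stated assumptions, not how Udio, Suno, or any other company builds its models: it follows one common textbook formulation (the DDPM linear noise schedule with deterministic, DDIM-style sampling) on a hypothetical “training set” of three pure tones. The function `ideal_denoiser` is a hypothetical stand-in that computes the exact best guess of the clean signal for this tiny dataset; a real system would replace it with a neural network trained on large amounts of audio.

```python
import math
import random

# Hypothetical "training set": three pure tones, each sampled at 64 points.
N = 64
TRAIN = [[math.sin(2 * math.pi * f * n / N) for n in range(N)] for f in (2, 5, 9)]

# Linear noise schedule (DDPM-style): beta_t rises from 1e-4 to 0.02 over T steps.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]
alpha_bar = []  # alpha_bar[t]: share of the clean signal's variance surviving at step t
prod = 1.0
for b in betas:
    prod *= 1.0 - b
    alpha_bar.append(prod)


def ideal_denoiser(x, ab):
    """Exact best guess of the clean waveform given noisy x (hypothetical stand-in).

    Each training waveform y is weighted by how likely it is to have produced x
    under x = sqrt(ab) * y + sqrt(1 - ab) * noise; a trained network approximates this.
    """
    s = math.sqrt(ab)
    logits = [-sum((xi - s * yi) ** 2 for xi, yi in zip(x, y)) / (2 * (1 - ab)) for y in TRAIN]
    top = max(logits)  # subtract the max before exponentiating, for numerical stability
    w = [math.exp(lg - top) for lg in logits]
    z = sum(w)
    return [sum(wk / z * y[n] for wk, y in zip(w, TRAIN)) for n in range(N)]


def sample(seed=0):
    """Reverse the noising process: start from pure noise and de-noise step by step."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(N)]  # pure random noise
    for t in reversed(range(T)):
        ab = alpha_bar[t]
        ab_prev = alpha_bar[t - 1] if t > 0 else 1.0
        x0_hat = ideal_denoiser(x, ab)
        # Noise component implied by the current estimate of the clean signal.
        eps_hat = [(xi - math.sqrt(ab) * ci) / math.sqrt(1 - ab) for xi, ci in zip(x, x0_hat)]
        # Step to a slightly less noisy version; every sample of the waveform is updated at once.
        x = [math.sqrt(ab_prev) * ci + math.sqrt(1 - ab_prev) * ei for ci, ei in zip(x0_hat, eps_hat)]
    return x
```

Calling `sample(seed=...)` turns a fresh batch of random numbers into a clean waveform, updating the whole signal together at every step rather than building it note by note. Because this idealized denoiser knows only three examples, each run ends on a copy of one of them; real models only approximate such a function across vast training sets.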

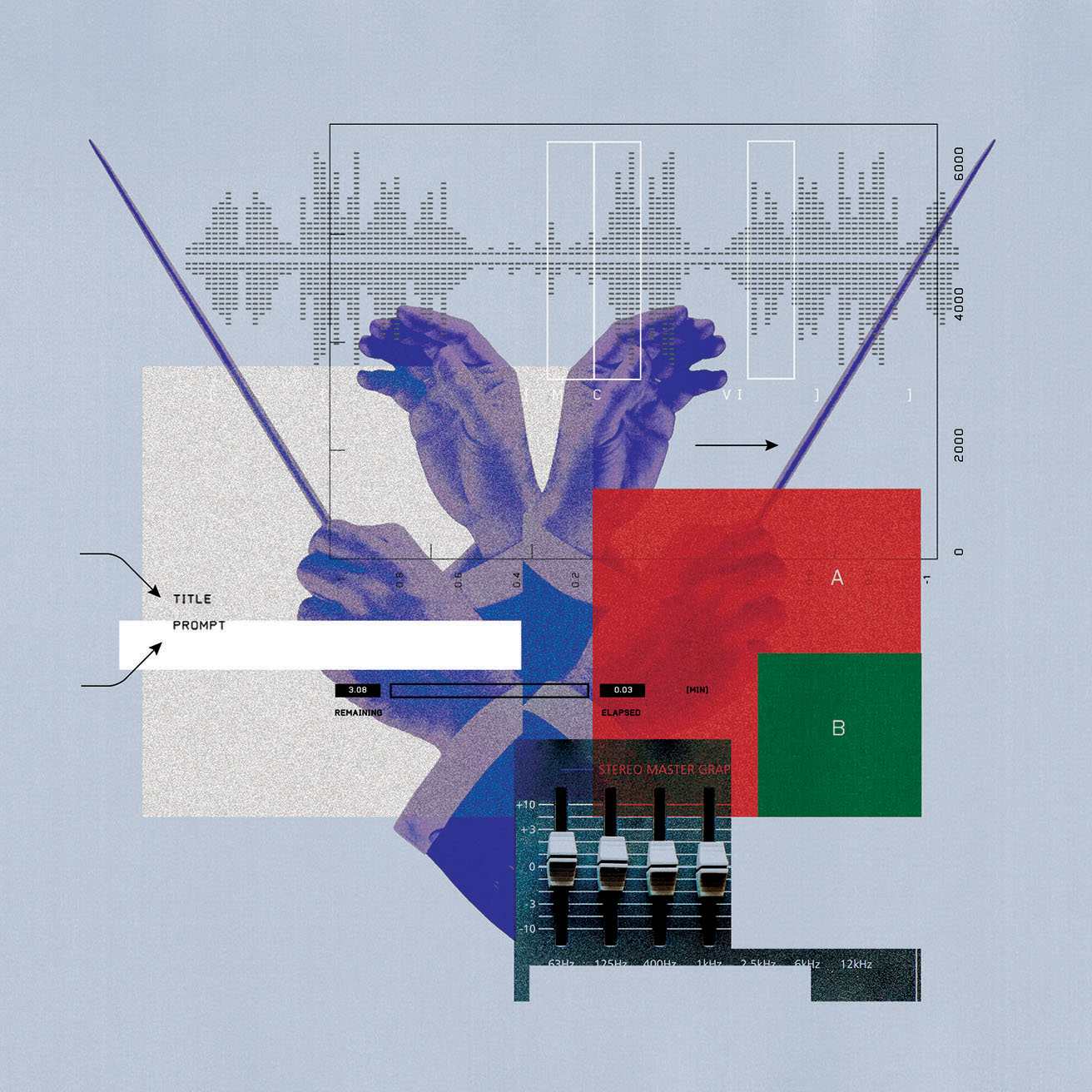

In music, diffusion models don’t “compose” in the traditional, note-by-note sense. Instead, they generate an entire track at once, working on waveforms: visual representations of the audio signal, much like the grooves on a record. AI music companies such as Udio and Suno both aim to democratize music creation and treat their models’ outputs as art, blurring the line between creation and replication. Legal challenges abound, as record labels sue over the use of copyrighted music to train these models without compensation. Yet AI music seems poised for growth, driven by improvements in training data quality, model architecture, and prompting.

AI music will likely thrive, raising questions of quality and authenticity. For now, AI outputs depend on human-made training data, but future models could be trained on AI-generated output. As the technology becomes more common, the randomness built into these models lends their outputs an element of intrigue, much as the Dartmouth group suggested. Yet human creativity is defined by the amplification of anomalies, in which unexpected elements transform a work of art. This uniqueness, evident in the work of human artists, poses a challenge for AI’s approach of sampling from statistical patterns.

Exploring Udio’s model highlights AI’s potential. Yet even when AI-generated tracks are indistinguishable from human ones, originality remains elusive. The nuance of human creativity, expressed through surprises and anomalies, is unmatched. The AI music debate prompts us to reassess what we value and what we consider authentic. Although AI-generated art faces legal and cultural scrutiny, its growing influence seems inevitable. Whether AI-generated music can rival the emotional depth of human composition is, in the end, for us as listeners to decide. As AI advances, whether a human stands behind a work of art becomes a central question, one that tests the boundaries of how we perceive and appreciate it.