Deep Cogito, a San Francisco-based AI research startup founded by former Googlers, has unveiled four new large language models (LLMs) designed to improve their own reasoning abilities over time. The models, which make up the Cogito v2 family, range from 70 billion to 671 billion parameters and are released under a mix of licensing terms. The lineup includes both dense and mixture-of-experts (MoE) models, each suited to different needs.

The dense models, the 70B and 405B variants, activate all of their parameters on every forward pass, making them suitable for low-latency applications and environments with limited GPU capacity. The MoE models, the 109B and 671B versions, instead use sparse routing to send each token through only a subset of expert subnetworks, so total model size can grow without a proportional increase in computational cost. That makes them a fit for high-performance inference, complex reasoning research, and applications that need frontier-level accuracy at lower runtime expense.
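To make the dense-versus-MoE distinction concrete, here is a minimal sketch of top-k expert routing and the parameter accounting that follows from it. It is a generic illustration, not Deep Cogito's architecture: the function names, the router logits, and the toy parameter counts are all assumptions chosen for readability.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Softmax over the selected logits only (subtract the max for stability).
    peak = max(router_logits[i] for i in chosen)
    exps = [math.exp(router_logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def total_params(shared, per_expert, num_experts):
    """Parameters stored in the model: shared layers plus every expert."""
    return shared + num_experts * per_expert


def active_params(shared, per_expert, k):
    """Parameters actually used for one token: shared layers plus k experts."""
    return shared + k * per_expert


# Hypothetical toy configuration (arbitrary units, not Cogito v2 figures).
SHARED, PER_EXPERT, NUM_EXPERTS, TOP_K = 10, 5, 8, 2

routing = top_k_route([0.1, 2.0, -1.0, 1.5], k=TOP_K)
stored = total_params(SHARED, PER_EXPERT, NUM_EXPERTS)
used = active_params(SHARED, PER_EXPERT, TOP_K)
```

In this toy setup the model stores 50 units of parameters but touches only 20 per token, which is the sense in which an MoE model can be large without a matching rise in compute. A dense model is the special case where every parameter is active on every pass.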

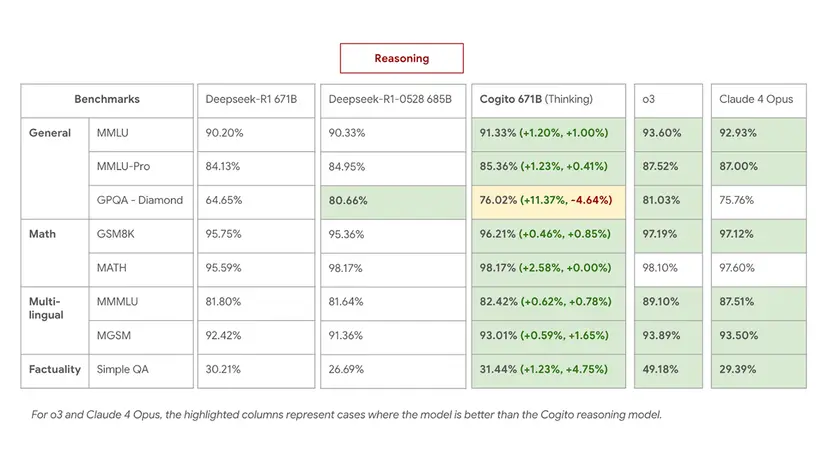

The flagship 671B MoE model in Cogito v2 outperforms leading open models on benchmarks, drawing on its scale and routing efficiency while using shorter reasoning chains. The models can be downloaded from Hugging Face, run locally through Unsloth, or accessed via APIs from Together AI, Baseten, and RunPod by those who cannot host inference themselves. An 8-bit floating point (FP8) version of the 671B model broadens access further by speeding up inference on more widely available hardware with minimal performance loss.
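A rough back-of-the-envelope calculation shows why an 8-bit format matters at this scale. The sketch below assumes the comparison baseline is a 16-bit format (2 bytes per parameter), which the article does not state, and it counts weights only, ignoring activations, the KV cache, and any quantization scale factors. The helper name is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory needed to hold the weights, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS_671B = 671e9  # parameter count of the flagship model

# Assumed 16-bit baseline: 2 bytes per parameter.
sixteen_bit_gb = weight_memory_gb(PARAMS_671B, 2)

# FP8: 1 byte per parameter.
fp8_gb = weight_memory_gb(PARAMS_671B, 1)
```

Under these assumptions the weights drop from roughly 1,342 GB to roughly 671 GB, halving the memory that has to be spread across accelerators before any other overhead is counted.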

All four models in the Cogito v2 family are designed as hybrid reasoning systems: they can answer immediately or reflect internally first when a task calls for it. This reflective capability is built into their training, which lets the models distill their reasoning processes back into their own weights over time and so learn which lines of thought are worth pursuing. The approach improves both reasoning efficiency and general performance.

Deep Cogito has been developing and releasing models since it emerged from stealth in April 2025, backed by a $13 million seed round. Its core method, iterated distillation and amplification (IDA), focuses on building improvements into the model itself rather than relying on static instructions or prompts.

The models have shown significant performance gains on internal benchmarks, especially on complex reasoning tasks, and are claimed to outperform competing models. Despite the models' size, Deep Cogito has managed to train them efficiently, at significantly lower cost than other major models on the market. The company is committed to open-source releases and iterative development, and it is advancing the idea of machine intuition by building reasoning into the models' core intelligence. This new generation of models signals a shift toward more efficient, self-improving AI.